
Why bias has become the new form of technical debt in AI


(Image credit: Shutterstock)


Bias isn’t just an ethical flaw in AI - it’s a cost center hiding in plain sight. Every few months a high-profile failure proves the point. But the real issue isn’t that AI sometimes behaves unfairly; it’s that biased automation quietly accumulates operational risk, reputational damage, and rework. That’s the very definition of technical debt.

Across digital services, we increasingly see how small, biased decisions compound over time, distorting customer journeys and forcing businesses into cycles of expensive correction. The ethical debate matters, but the commercial consequences are becoming far harder to ignore.

Alicia Skubick, Chief Communications Officer at Trustpilot.

The recent Workday lawsuit alleging discriminatory screening practices is one example. Customer service is another area where biased or blunt automation shows its limits. Sensitive scenarios (bereavement, fraud, complaints, major life events) routinely expose where AI fails to read the emotional weight of a situation. Instead of reducing friction, it can amplify it.


People report being looped endlessly through chatbots, or facing automatic responses that miss the point entirely. We regularly see reviews across industries where automation escalates frustration rather than resolving it - especially when a customer clearly needs judgement, empathy and discretion.

One reviewer recently described being “stuck in a cul-de-sac” while trying to close an account after a family death - a phrase that captures how quickly trust frays when systems aren’t designed for real human context.

But ethics are only the surface story. The bigger, more expensive problem is the technical debt created when biased systems are deployed too fast and left unmonitored - hidden liabilities, compounding costs, and clean-up work shoved into the future.

Rush to deploy becomes rush to repair

Businesses have rushed to implement AI systems across their infrastructure, driven by promises of efficiency and cost savings. But in that rush, many systems simply aren’t ready. In the Workday example, what was intended to streamline hiring became a source of legal risk and reputational backlash.

Poor AI-led experiences have broader ramifications: lost customers, higher service load, lower conversion. And the commercial impact is already visible. Research commissioned by Trustpilot from the Centre for Economics and Business Research (Cebr) found that while consumer use of AI in ecommerce is climbing fast, the most common use cases, such as chatbots, are frequently driving negative experiences.

The ripple effect is real. A single poor AI interaction leads people to tell, on average, two others, multiplying the impact. Over the past year alone, an estimated £8.6 billion of UK ecommerce sales was put at risk by negative AI experiences, equivalent to around 6% of total UK online spending.

If those negative experiences continue, the liability compounds: bias embeds itself into service flows, increasing churn, lowering conversion, and making each subsequent customer touchpoint more expensive to fix. Instead of saving money, businesses walk straight into sunk costs and unplanned liabilities.


Inclusion as a preventative measure

Anyone who has ever tried to repaint a room without moving the furniture knows how hard it is to do the job properly once everything is already in place. Fixing AI is no different. Fixing systems after rollout is one of the most expensive forms of rework in technology, with costs reaching tens to hundreds of millions, according to Statista. Once bias is embedded in datasets, prompts, workflows or model assumptions, it becomes incredibly difficult, and costly, to unpick.

And here’s the uncomfortable truth: instead of streamlined operations, organizations are discovering a pile-up of unbudgeted costs, from retraining models and legal fees to regulatory clarifications and the painstaking work of rebuilding customer trust. What starts as a shortcut quickly becomes a structural weakness that absorbs budget, time and credibility.

Designing and testing AI with teams that reflect the diversity of your customers isn’t a nice-to-have - it’s the most reliable way to prevent bias entering the system in the first place. The question for leaders now isn’t whether bias exists in their systems - it’s whether they’ve built the oversight to find it before customers do.

Three actions to prevent AI bias debt

There are practical ways for businesses to protect themselves from incurring this debt:

Executive ownership of AI outcomes: Bias and fairness must be treated as core performance issues, not side-of-desk ethics tasks. Someone at the exec level must own AI outcomes and publish clear success metrics - whether that sits in product, tech, risk, or a shared governance model.

Diversity at development and testing stage: Build teams that reflect your current and future users. A wider range of lived experiences reduces blind spots at the exact moments where automation tends to break. If using third-party tools, interrogate suppliers on how they identify and mitigate bias.

Continuous monitoring and human oversight: Bias shifts over time. Models that were fair six months ago can drift. Regular auditing, demographic stress-testing and user feedback loops keep systems honest. And human judgement - from teams trained to spot escalation patterns - is the final safeguard against small issues becoming systematic failures.
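To make "regular auditing" and "demographic stress-testing" a little more concrete, here is a minimal sketch in Python. It assumes each audited interaction can be tagged with a customer segment and a favourable or unfavourable outcome (for example, resolved without a dead-end chatbot loop, or passed a screening step). The function names, segment labels, sample data and the 0.8 threshold are illustrative assumptions, not a Trustpilot or vendor method; the threshold simply echoes the "four-fifths" rule of thumb from US employment-selection guidelines.

```python
from collections import defaultdict


def outcome_rates(records):
    """Share of favourable outcomes per group.

    records: iterable of (group, favourable) pairs; favourable is a bool.
    Group labels are whatever segments the audit uses (hypothetical here).
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        if ok:
            favourable[group] += 1
    return {g: favourable[g] / totals[g] for g in totals}


def flag_disparities(rates, threshold=0.8):
    """Groups whose rate falls below `threshold` times the best group's rate.

    The 0.8 default mirrors the 'four-fifths' rule of thumb; it is an
    assumed starting point for review, not a legal or statistical test.
    """
    best = max(rates.values())
    if best == 0:
        return []  # nobody gets a favourable outcome; ratios are undefined
    return sorted(g for g, r in rates.items() if r / best < threshold)


def drift(baseline, current):
    """Per-group change in favourable-outcome rate between two audit windows."""
    return {g: current[g] - baseline[g] for g in baseline if g in current}


# Hypothetical audit window: (segment, resolved without a dead-end loop)
window = ([("A", True)] * 90 + [("A", False)] * 10
          + [("B", True)] * 60 + [("B", False)] * 40)
rates = outcome_rates(window)   # {"A": 0.9, "B": 0.6}
print(flag_disparities(rates))  # ['B'], because 0.6 / 0.9 is below 0.8
```

Running the same check on every audit window, and comparing each window against a baseline with `drift`, is one way to catch the gradual slide the author describes. A flagged group is a prompt for human review, not proof of bias on its own.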

Fairness as performance infrastructure

The next phase of AI maturity will reward companies that treat fairness as performance infrastructure - not as a compliance checkbox.

Bias behaves like technical debt: it compounds quietly, slows innovation, increases costs, and erodes trust long before leadership notices. No team sets out to build a system that trips people up, but the combination of pace, pressure and patchy oversight makes it surprisingly easy for blind spots to slip through.

Businesses that build fairness in from day one will scale faster, comply faster and spend dramatically less on clean-up. Those that don’t will find that the real cost of bias isn’t ethical - it’s financial, operational and reputational.

Fairness isn’t abstract. Customers feel it instantly - in the tone of an automated message, in how long it takes to reach a human, in whether the system seems to understand what they’re actually asking for. In AI, getting it right isn’t just the moral path, it’s the cost-saving one.

Check out our list of the best AI tools.

TOPICS AI Alicia SkubickSocial Links NavigationChief Communications Officer at Trustpilot.

View MoreYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more AI blindness is costing your business: how to build trust in the data powering AI

AI blindness is costing your business: how to build trust in the data powering AI

Harmonizing AI innovation with cost, risk & ROI

Harmonizing AI innovation with cost, risk & ROI

Why so many businesses are still on the wrong side of the AI divide

Why so many businesses are still on the wrong side of the AI divide

The ROI blueprint: turning AI and automation into business value

The ROI blueprint: turning AI and automation into business value

How to take AI from pilots to deliver real business value

How to take AI from pilots to deliver real business value

From curiosity to culture: Advocating for AI

Latest in Pro

From curiosity to culture: Advocating for AI

Latest in Pro

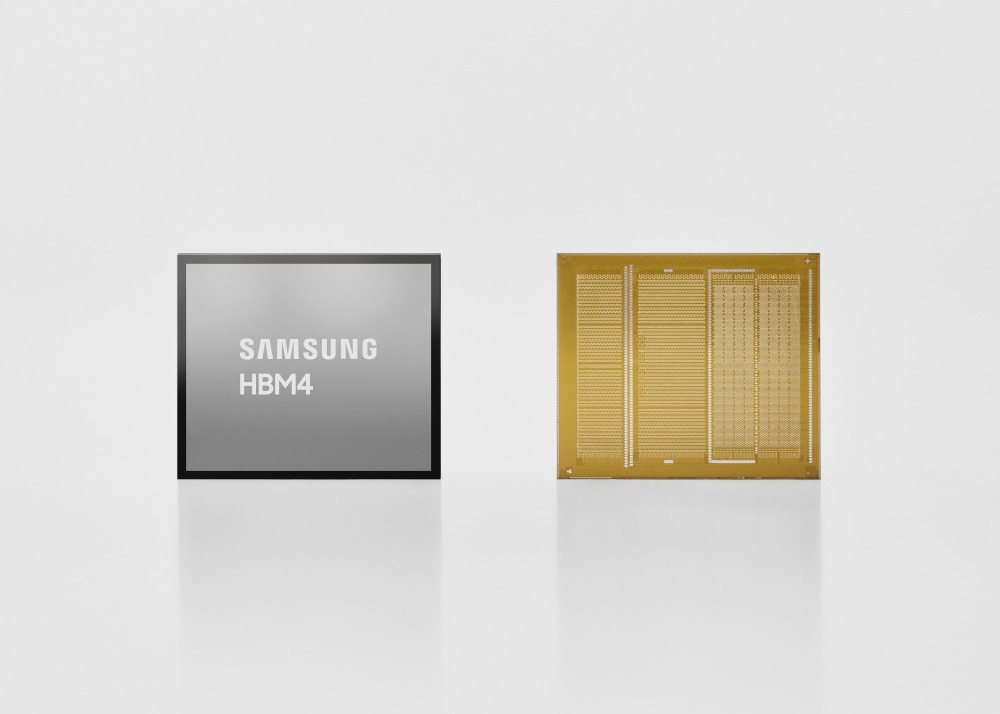

Samsung says it “took the leap” with HBM4, as it starts shipping faster AI memory built on advanced process nodes

Samsung says it “took the leap” with HBM4, as it starts shipping faster AI memory built on advanced process nodes

Dynabook Tecra A65-M business laptop review

Dynabook Tecra A65-M business laptop review

Forget zero-days - 'N-days' could be the most worrying security threat facing your systems today, here's why

Forget zero-days - 'N-days' could be the most worrying security threat facing your systems today, here's why

Feeling swamped by all the different AI agent names? GoDaddy has a new plan for avoiding confusion

Feeling swamped by all the different AI agent names? GoDaddy has a new plan for avoiding confusion

'If someone can inject instructions or spurious facts into your AI’s memory, they gain persistent influence over your future interactions': Microsoft warns AI recommendations are being "poisoned" to serve up malicious results

'If someone can inject instructions or spurious facts into your AI’s memory, they gain persistent influence over your future interactions': Microsoft warns AI recommendations are being "poisoned" to serve up malicious results

WP Engine-Automattic feud resurfaces with new claims of royalty fees and contract threats

Latest in Opinion

WP Engine-Automattic feud resurfaces with new claims of royalty fees and contract threats

Latest in Opinion

I tried to tell you about living in AI Time — this essay nails its harsh reality, and here's why we're not truly screwed

I tried to tell you about living in AI Time — this essay nails its harsh reality, and here's why we're not truly screwed

HP's latest brainwave is renting you a gaming laptop rather than selling it

HP's latest brainwave is renting you a gaming laptop rather than selling it

Apple TV is releasing fewer movies in 2026, despite having a ‘world of explosive action'

Apple TV is releasing fewer movies in 2026, despite having a ‘world of explosive action'

Come on, China – the Range Rover clones have to stop

Come on, China – the Range Rover clones have to stop

From curiosity to culture: Advocating for AI

From curiosity to culture: Advocating for AI

Privacy’s about to become boring, and that’s a good thing (for those that take note)

LATEST ARTICLES

Privacy’s about to become boring, and that’s a good thing (for those that take note)

LATEST ARTICLES- 1GTA 6 arrives in the era of podcasting, and I’m betting it’ll absolutely parody wellness bros, true crime, and Reddit relationship confessions

- 2Scotland vs England Free Streams: How to watch Six Nations 2026 game, TV Channels, Preview for Calcutta Cup

- 3Exclusive: Lego is bringing back the X-1 Ninja Charger for Ninjago’s 15th anniversary — and it hides a motorcycle inside

- 4Apple is rumored to be working on an iPhone Flip as well as an iPhone Fold

- 5A ‘desk yoga’ teacher recommends 3 beginner-friendly exercises for better posture and to undo the damage of gaming and office work