AI is causing problems and there are warning signs it's going to get worse

(Image credit: Getty Images)

There's been an endless parade of proclamations over the last few years about an AI golden age. Developers proclaim a new industrial revolution and executives promise frictionless productivity and amazing breakthroughs accelerated by machine intelligence. Every new product seems to boast of its AI capability, no matter how unnecessary.

But that golden sheen has a darker edge. There are growing indications that the problems with AI are not minor bugs to be fixed in the next update, but a persistent, unavoidable element of the technology and its deployment. Some of the concerns are born out of myths about AI, but that doesn't mean that there's nothing to worry about. Even if the technology itself isn't scary, how people use it can be plenty frightening. And the fixes offered by AI's biggest proponents often seem likely to make things worse.

1. AI safety experts flee

This month, the head of AI safety research at Anthropic resigned and did so loudly. In a public statement, he warned that “the world is in peril” and questioned whether core values were still steering the company’s decisions. A senior figure whose job was to think about the long term and how increasingly capable systems might go wrong decided it was impossible to keep going. His departure followed a string of other exits across the industry, including founders and senior staff at xAI and other high-profile labs. The pattern has been difficult to ignore.

Resignations happen in tech all the time, of course, but these departures have come wrapped in moral concern. They have been accompanied by essays and interviews that describe internal debates about safety standards, competitive pressure, and whether the race to build more powerful models is outpacing the ability to control them. When the people tasked with installing the brakes begin stepping away from the vehicle, it suggests that the car may be accelerating in ways even insiders find troubling.

AI companies are building systems that will shape economies, education, media, and possibly warfare. If their own safety leaders feel compelled to warn that the world is veering into dangerous territory, that warning deserves more than a shrug.

2. Deepfake dangers

It's hard to argue there isn't an issue in AI safety when regulators in the United Kingdom and elsewhere find credible evidence of horrific misuse of AI, such as reports that Grok on X had generated sexually explicit and abusive imagery, including deepfake content involving minors.

Not that deepfakes are new, but now, with the right prompts and a few minutes of patience, users can produce fabricated images that would have required significant technical expertise just a few years ago. Victims have little recourse once manipulated images spread across the internet’s memory.

From an apocalyptic perspective, this is not about a single scandal. It is about erosion. Trust in visual evidence was already fragile. Now it is cracking. If any image can be plausibly dismissed as synthetic and any person can be digitally placed into compromising situations, the shared factual ground beneath public discourse begins to dissolve. The hopeful counterpoint is that regulators are paying attention and that platform operators are being forced to confront misuse. Stronger safeguards, better detection tools, and clearer legal standards could blunt the worst outcomes, but that won't undo the damage already done.

3. Real world hallucinations

For years, most AI failures lived on screens, but that boundary is fading as AI systems are starting to help steer cars, coordinate warehouse robots, and guide drones. They interpret sensor data and make split-second decisions in the physical world. And security researchers have been warning that these systems are surprisingly easy to trick.

Studies have demonstrated that subtle environmental changes, such as altered road signs, strategically placed stickers, or misleading text, can cause AI vision systems to misclassify objects. In a lab, that might mean a stop sign interpreted as a speed limit sign. On a busy road, it could mean something far worse. Malicious actors who exploit these weaknesses are an emerging threat. The more infrastructure depends on AI decision-making, the more attractive it becomes as a target.

The apocalyptic imagination leaps quickly from there. Ideally, awareness of these weaknesses will drive investment in robust security practices before autonomous systems become ubiquitous. But if deployment outruns safety, we could all learn some painful lessons in real time.

4. Chatbots get a sales team

OpenAI began rolling out advertising within ChatGPT recently, and the response has been mixed, to say the least. A senior OpenAI researcher resigning publicly and arguing that ad-driven AI products risk drifting toward user manipulation probably didn't help calm matters. When a system that knows your fears, ambitions, and habits also carries commercial incentives, the lines between assistance and persuasion blur.

Social media platforms once promised simple connections and information sharing, but their ad-based business models shaped design choices that maximized engagement, sometimes at the cost of user well-being. An AI assistant embedded with advertising could face similar pressures. Subtle prioritization of certain answers. Nudges that align with sponsor interests. Recommendations calibrated not only for relevance but for revenue.

To be fair, advertising does not automatically corrupt a product. Clear labeling, strict firewalls between monetization and core model behavior, and regulatory oversight could prevent worst-case scenarios. Still, that resignation suggests there's real, unresolved tension on that front.

5. A growing catalogue of mishaps

There is also the simple accumulation of evidence of problems with AI. Between November 2025 and January 2026, the AI Incident Database logged 108 new incidents. Each entry documents a failure, misuse, or unintended consequence tied to AI systems. Many cases may be minor, but every report of AI being used for fraud or dispensing dangerous advice adds up.

Acceleration is what matters here. AI tools are popping up everywhere, and so the number of problems multiplies. Perhaps the uptick in reported incidents is simply a sign of better tracking rather than worsening performance, but it's doubtful that accounts for everything. A still relatively new technology has a lot of harm to its name.

Apocalypse is a loaded word evoking images of collapse and finality. AI may not be at that point and might never reach it, but it's undeniably causing a lot of turbulence. Catastrophe is not inevitable, but complacency would be a mistake. It might not be the end of the world, but AI can certainly make it feel that way.

Eric Hal Schwartz, Contributor

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.

View MoreYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more 5 AI predictions for 2026

5 AI predictions for 2026

I tried to tell you about living in AI Time — this essay nails its harsh reality, and here's why we're not truly screwed

I tried to tell you about living in AI Time — this essay nails its harsh reality, and here's why we're not truly screwed

How 5 2025 AI trends affected my life

How 5 2025 AI trends affected my life

Microsoft's warning on 'security implications' of AI agents is causing panic

Microsoft's warning on 'security implications' of AI agents is causing panic

'It's time to demand AI that is safe by design': What AI experts think will matter most in 2026

'It's time to demand AI that is safe by design': What AI experts think will matter most in 2026

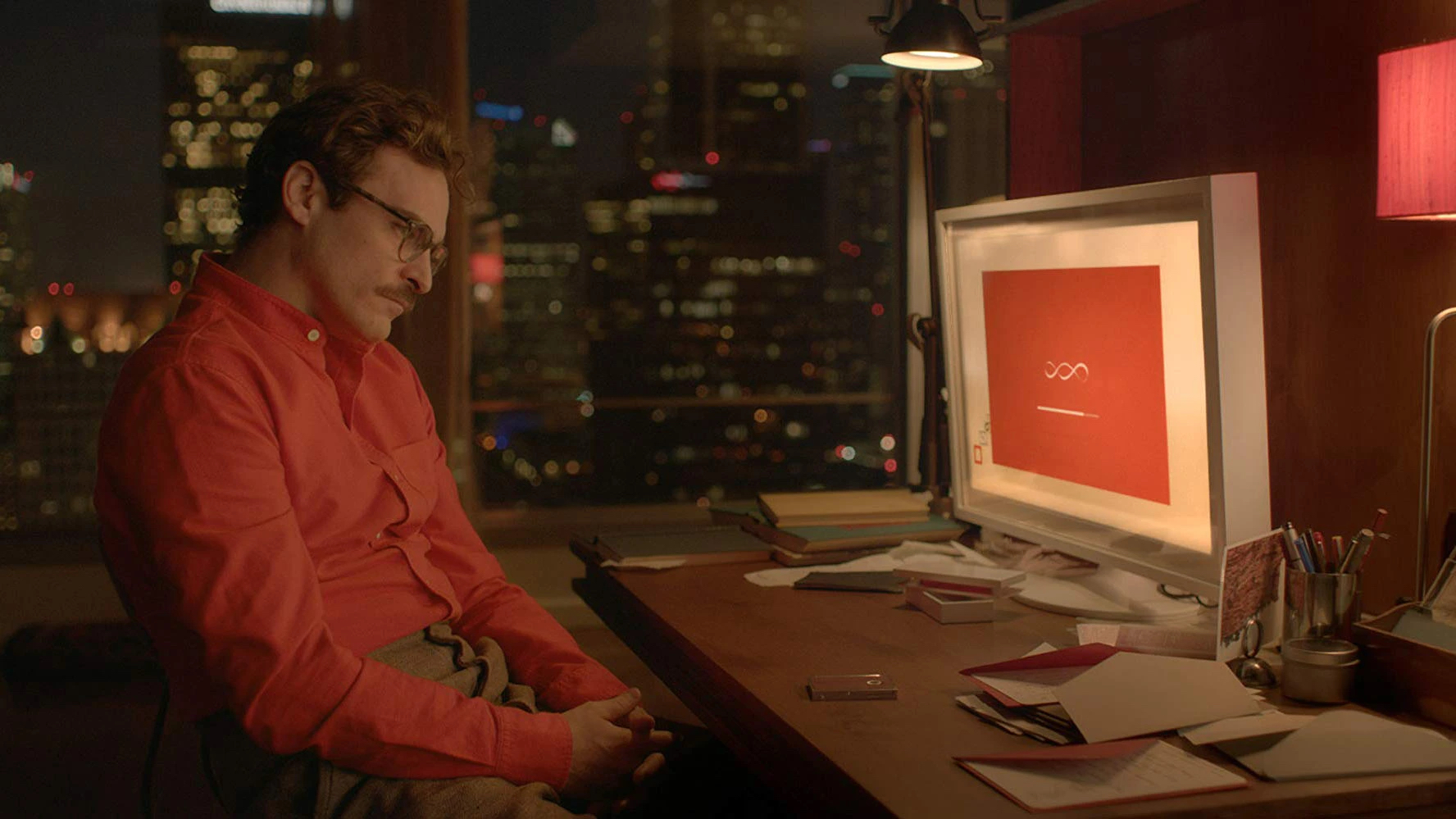

8 movies about our current relationship with AI

Latest in AI Platforms & Assistants

8 movies about our current relationship with AI

Latest in AI Platforms & Assistants

OpenAI has switched off ChatGPT-4o, and angry users want it back

OpenAI has switched off ChatGPT-4o, and angry users want it back

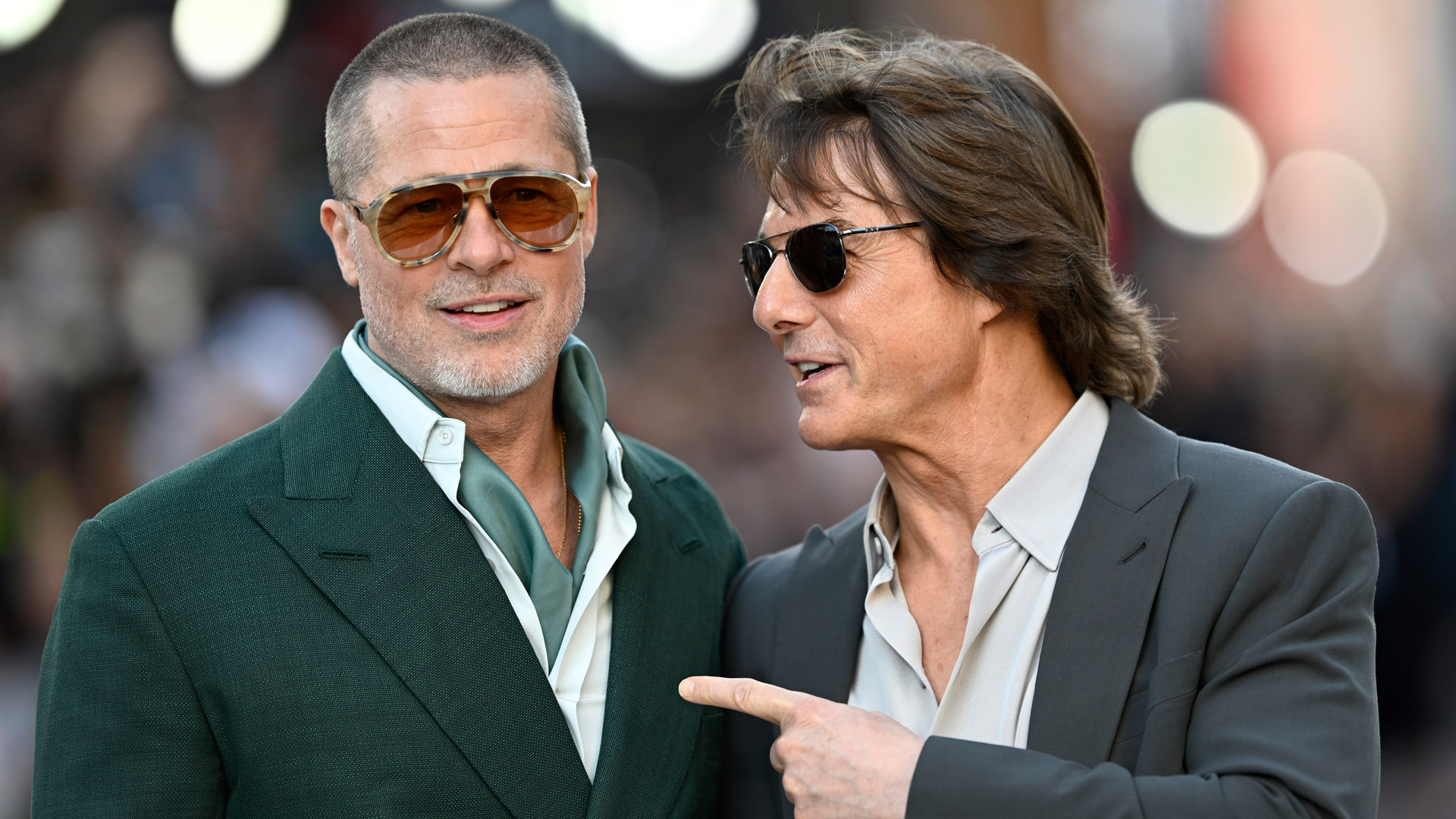

'This is unacceptable' — SAG-AFTRA reacts with outrage to AI-generated Brad Pitt vs. Tom Cruise 'fight' clip

'This is unacceptable' — SAG-AFTRA reacts with outrage to AI-generated Brad Pitt vs. Tom Cruise 'fight' clip

Ouch! 96% of TechRadar readers say they don’t use Apple Intelligence

Ouch! 96% of TechRadar readers say they don’t use Apple Intelligence

ICYMI: the week's 7 biggest tech news stories for February 14, 2026.

ICYMI: the week's 7 biggest tech news stories for February 14, 2026.

I tried to tell you about living in AI Time — this essay nails its harsh reality, and here's why we're not truly screwed

I tried to tell you about living in AI Time — this essay nails its harsh reality, and here's why we're not truly screwed

Stickerbox is my latest fixation – here's how I got on trying it for the first time

Latest in Features

Stickerbox is my latest fixation – here's how I got on trying it for the first time

Latest in Features

America's Next Top Model Netflix documentary does no favors for Tyra Banks

America's Next Top Model Netflix documentary does no favors for Tyra Banks

5 alarming signs of an AI apocalypse on the way

5 alarming signs of an AI apocalypse on the way

The Arcade2TV-XR is the Meta Quest 3 accessory making me love VR again

The Arcade2TV-XR is the Meta Quest 3 accessory making me love VR again

Fatal Frame 2: Crimson Butterfly Remake’s directors say the game’s take on Japanese horror is ‘both beautiful and terrifying’

Fatal Frame 2: Crimson Butterfly Remake’s directors say the game’s take on Japanese horror is ‘both beautiful and terrifying’

I tested LG G5 OLED TV’s HDR upgrade, and the brightness boost is very real

I tested LG G5 OLED TV’s HDR upgrade, and the brightness boost is very real

5 innovations I want from Android phones

LATEST ARTICLES

5 innovations I want from Android phones

LATEST ARTICLES- 1'The world is in peril' — 5 reasons why the AI apocalypse might be closer than you think

- 2Macclesfield vs Brentford Live Streams: How to watch FA Cup 2025/26 from anywhere in the world

- 3What is the release date for Dove Cameron and Avan Jogia's new Prime Video show 56 Days?

- 4PrivadoVPN’s PhantomMode lands on iOS to stop apps spying on you

- 5Samsung Galaxy Buds 4 leak suggests they could be missing a much-wanted AirPods 4 feature