New memory generation targets rising GPU workload demands

(Image credit: Samsung)

- Samsung begins commercial HBM4 shipments as AI memory competition heats up

- HBM4 reaches 11.7Gbps speeds while pushing higher bandwidth and efficiency gains for data centers

- Samsung scales production plans with roadmap extending to HBM4E and custom memory variants

Samsung says it has not only begun mass production of HBM4 memory, but also shipped the first commercial units to customers, claiming an industry first for the new high bandwidth memory generation.

HBM4 is built on Samsung’s sixth-generation 10nm-class DRAM process and uses a 4nm logic base die, which reportedly helped the South Korean memory giant reach stable yields without redesigns as production ramped up.

That’s a technical claim that will likely be tested once large-scale deployments start and independent performance results appear.

Up to 48GB capacity

The new memory reaches a consistent transfer speed of 11.7Gbps, with headroom up to 13Gbps in certain configurations.

Samsung compares that with an industry baseline of 8Gbps and puts HBM3E at 9.6Gbps. Total memory bandwidth climbs to 3.3TB/s per stack, roughly 2.7 times that of the previous generation.

Capacity ranges from 24GB to 36GB in 12-layer stacks, with 16-layer versions coming later. This could increase capacity to 48GB for customers that need denser configurations.
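The 48GB figure is consistent with simple scaling from the largest 12-layer stack. The sketch below is a rough check only: it assumes each layer in the 16-layer version holds the same capacity as in the 36GB 12-layer stack, which the announcement does not state, and the function name `stack_capacity_gb` is made up for illustration.

```python
def stack_capacity_gb(layers: int, gb_per_layer: float) -> float:
    """Total stack capacity, assuming every layer holds the same amount."""
    return layers * gb_per_layer

# Assumption: per-layer capacity is derived from the 36GB, 12-layer stack.
per_layer_gb = 36 / 12  # 3.0 GB per layer

sixteen_layer_gb = stack_capacity_gb(16, per_layer_gb)
print(sixteen_layer_gb)  # 48.0
```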

Power use is a key issue as HBM designs increase pin counts, and this generation moves from 1,024 to 2,048 pins.

Samsung says it improved power efficiency by about 40% compared with HBM3E via low-voltage through-silicon-via technology and power distribution tweaks, alongside thermal changes that improve heat dissipation and thermal resistance.
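The bandwidth figures can be checked with basic arithmetic: per-pin speed times pin count, divided by eight bits per byte. The sketch below is a back-of-the-envelope check, not Samsung's published method. It assumes the quoted Gbps figures are per-pin rates, that the 1,024-pin interface applies to HBM3E at 9.6Gbps, and that the 3.3TB/s peak corresponds to the 13Gbps headroom figure. The function name `stack_bandwidth_tbps` is made up for illustration.

```python
def stack_bandwidth_tbps(pin_speed_gbps: float, pins: int) -> float:
    """Peak per-stack bandwidth in TB/s (decimal units).

    pin_speed_gbps * pins gives Gbit/s; /8 converts to GB/s; /1000 to TB/s.
    """
    return pin_speed_gbps * pins / 8 / 1000

# Assumption: figures below pair the article's pin speeds with its pin counts.
hbm3e = stack_bandwidth_tbps(9.6, 1024)        # about 1.23 TB/s
hbm4_rated = stack_bandwidth_tbps(11.7, 2048)  # about 3.00 TB/s
hbm4_peak = stack_bandwidth_tbps(13.0, 2048)   # about 3.33 TB/s

print(round(hbm4_peak / hbm3e, 2))  # 2.71
```

Under those assumptions, HBM4 at 11.7Gbps works out to about 3.0TB/s per stack, 13Gbps gives about 3.3TB/s, and the ratio to HBM3E comes to roughly 2.7, in line with the figures Samsung quotes.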

“Instead of taking the conventional path of utilizing existing proven designs, Samsung took the leap and adopted the most advanced nodes like the 1c DRAM and 4nm logic process for HBM4,” said Sang Joon Hwang, EVP and Head of Memory Development at Samsung Electronics.

“By leveraging our process competitiveness and design optimization, we are able to secure substantial performance headroom, enabling us to satisfy our customers’ escalating demands for higher performance, when they need them.”

The company also points to its manufacturing scale and in-house packaging as key reasons it can meet expected demand growth.

That includes closer coordination between foundry and memory teams as well as partnerships with GPU makers and hyperscalers building custom AI hardware.

Samsung says it expects its HBM business to grow sharply across 2026, with HBM4E sampling planned for later in the year and custom HBM samples set to follow in 2027.

Whether competitors respond with similar timelines or faster alternatives will shape how long this early lead lasts.

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.

View MoreYou must confirm your public display name before commenting

Please logout and then login again, you will then be prompted to enter your display name.

Logout Read more Now that's a team-up: Samsung and Nvidia expected to join forces to feature 'revolutionary' HBM4 memory modules in upcoming Vera Rubin hardware

Now that's a team-up: Samsung and Nvidia expected to join forces to feature 'revolutionary' HBM4 memory modules in upcoming Vera Rubin hardware

Samsung and Sandisk are set to integrate rival HBF technology into AI products from Nvidia, AMD, and Google within 24 months, and that's a huge deal

Samsung and Sandisk are set to integrate rival HBF technology into AI products from Nvidia, AMD, and Google within 24 months, and that's a huge deal

New 'serial' tech will significantly reduce the cost of memory — HBM memory, that is, the sort of RAM only AI hyperscalers can use, but hey, at least they won't go after consumer RAM, or would they?

New 'serial' tech will significantly reduce the cost of memory — HBM memory, that is, the sort of RAM only AI hyperscalers can use, but hey, at least they won't go after consumer RAM, or would they?

Arm's owner and Intel say their Z-Angle Memory, HBM-rival, will hit the market in 2029 - but I hope it won't be another Optane/3D-Xpoint, which caused Micron to lose millions

Arm's owner and Intel say their Z-Angle Memory, HBM-rival, will hit the market in 2029 - but I hope it won't be another Optane/3D-Xpoint, which caused Micron to lose millions

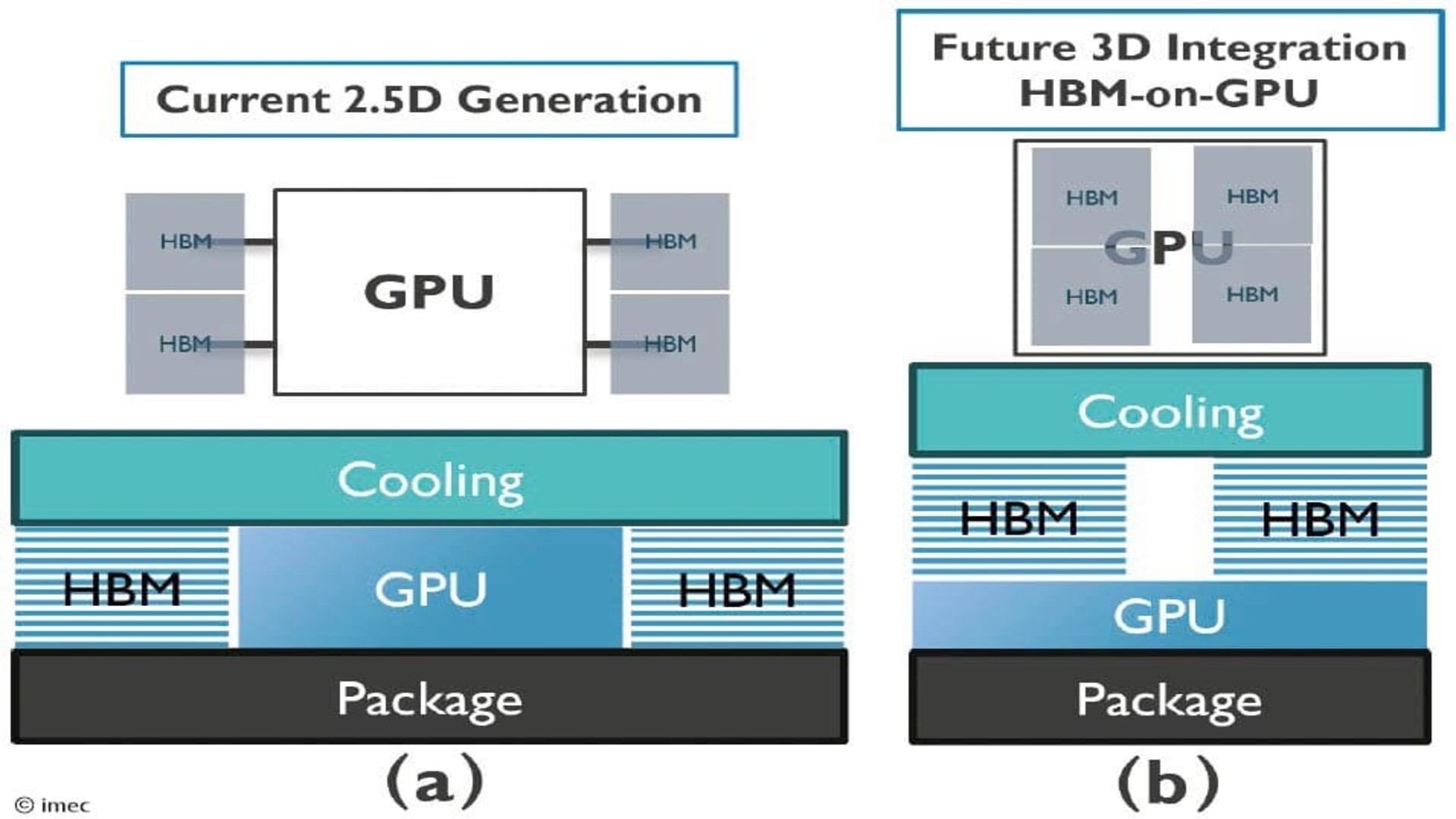

HBM-on-GPU set to power the next revolution in AI accelerators - and just to confirm, there's no way this will come to your video card anytime soon

HBM-on-GPU set to power the next revolution in AI accelerators - and just to confirm, there's no way this will come to your video card anytime soon

Samsung RAM prices have doubled — and the worst is yet to come

Latest in Pro

Samsung RAM prices have doubled — and the worst is yet to come

Latest in Pro

Dynabook Tecra A65-M business laptop review

Dynabook Tecra A65-M business laptop review

Forget zero-days - 'N-days' could be the most worrying security threat facing your systems today, here's why

Forget zero-days - 'N-days' could be the most worrying security threat facing your systems today, here's why

Feeling swamped by all the different AI agent names? GoDaddy has a new plan for avoiding confusion

Feeling swamped by all the different AI agent names? GoDaddy has a new plan for avoiding confusion

'If someone can inject instructions or spurious facts into your AI’s memory, they gain persistent influence over your future interactions': Microsoft warns AI recommendations are being "poisoned" to serve up malicious results

'If someone can inject instructions or spurious facts into your AI’s memory, they gain persistent influence over your future interactions': Microsoft warns AI recommendations are being "poisoned" to serve up malicious results

WP Engine-Automattic feud resurfaces with new claims of royalty fees and contract threats

WP Engine-Automattic feud resurfaces with new claims of royalty fees and contract threats

How to finally operationalize Agentic AI and realize its full potential

Latest in News

How to finally operationalize Agentic AI and realize its full potential

Latest in News

'This is unacceptable' — SAG/AFTRA reacts to the viral Seedance 2.0 AI-generated Pitt-Cruise fight

'This is unacceptable' — SAG/AFTRA reacts to the viral Seedance 2.0 AI-generated Pitt-Cruise fight

Jon Favreau says The Mandalorian & Grogu had to “up our game” for theaters

Jon Favreau says The Mandalorian & Grogu had to “up our game” for theaters

There's a sneaky way to watch NBA All star Weekend for FREE

There's a sneaky way to watch NBA All star Weekend for FREE

007 First Light gets new story trailer ahead of its May launch, showcasing a charming James Bond in his early years as a young recruit, new characters, and even more explosions

007 First Light gets new story trailer ahead of its May launch, showcasing a charming James Bond in his early years as a young recruit, new characters, and even more explosions

The latest Control Resonant gameplay trailer showcases new zones, abilities, and progression, which Remedy says 'is all about building your perfect combat flow'

The latest Control Resonant gameplay trailer showcases new zones, abilities, and progression, which Remedy says 'is all about building your perfect combat flow'

Micro Four Thirds has a mysterious new member – will it revitalize or kill the system?

LATEST ARTICLES

Micro Four Thirds has a mysterious new member – will it revitalize or kill the system?

LATEST ARTICLES- 1'Oura’s long‑term vision is to help shift health systems' — Oura's chief medical officer tells us how it's using smart rings to shape US legislation

- 2Your Roku just got yet more free streaming channels, including a Pokémon channel — but you should expect to see a bunch of AI ads on there in the future

- 3'This is unacceptable' — SAG/AFTRA reacts to the viral Seedance 2.0 AI-generated Pitt-Cruise fight

- 4The MG S5 is the SUV follow-up to one of the best EVs on the road – but is it anywhere near as charming?

- 5China has extracted 1000g of uranium from just seawater - targets "unlimited battery life" by 2050, tapping into 4.5 billion tons of aquatic uranium